Covariates in the detection function solution

Covariates in the detection function

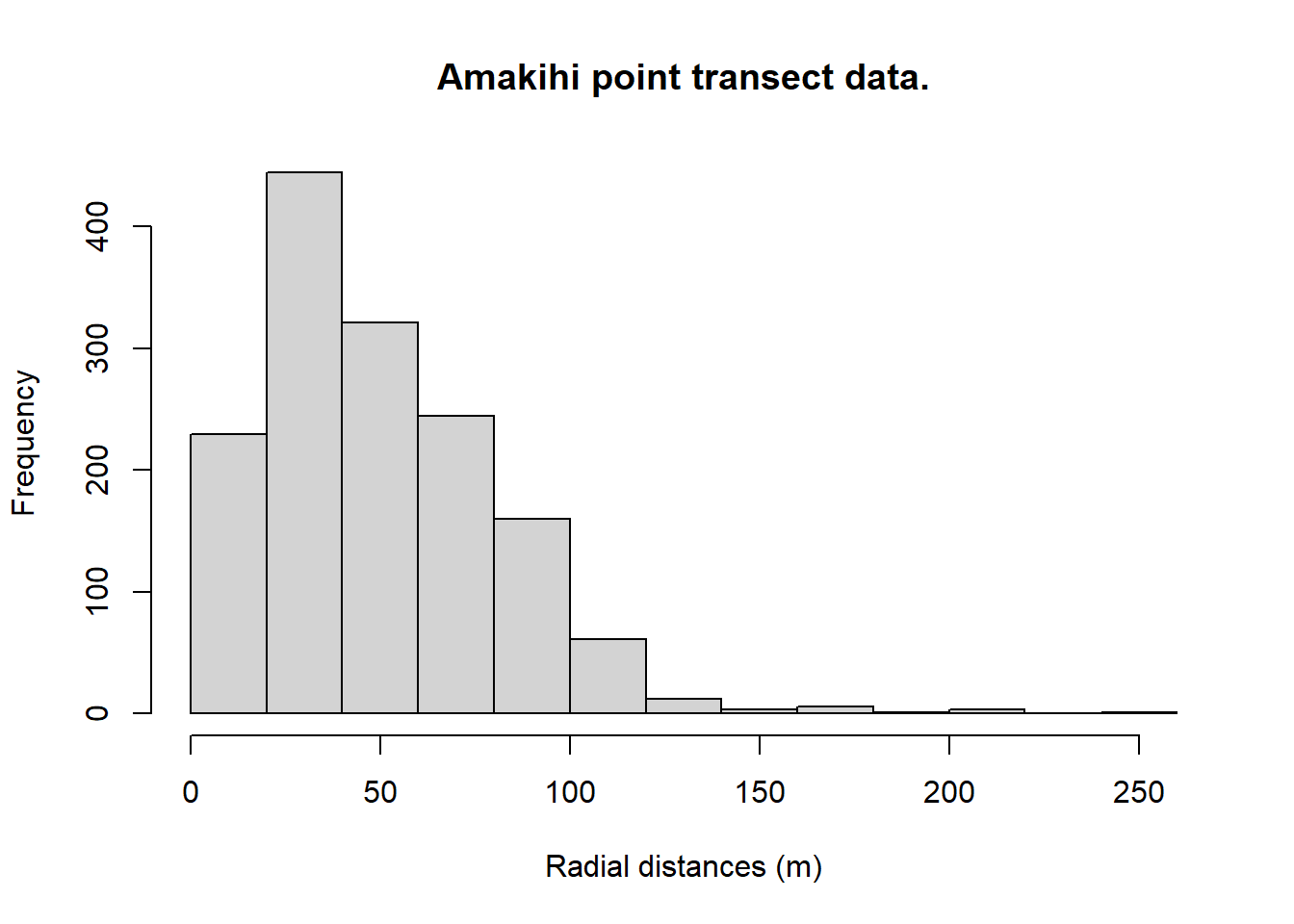

Covariates in point transect detection functions: Amakihi

A truncation distance of 82.5 m was chosen by T. A. Marques et al. (2007).

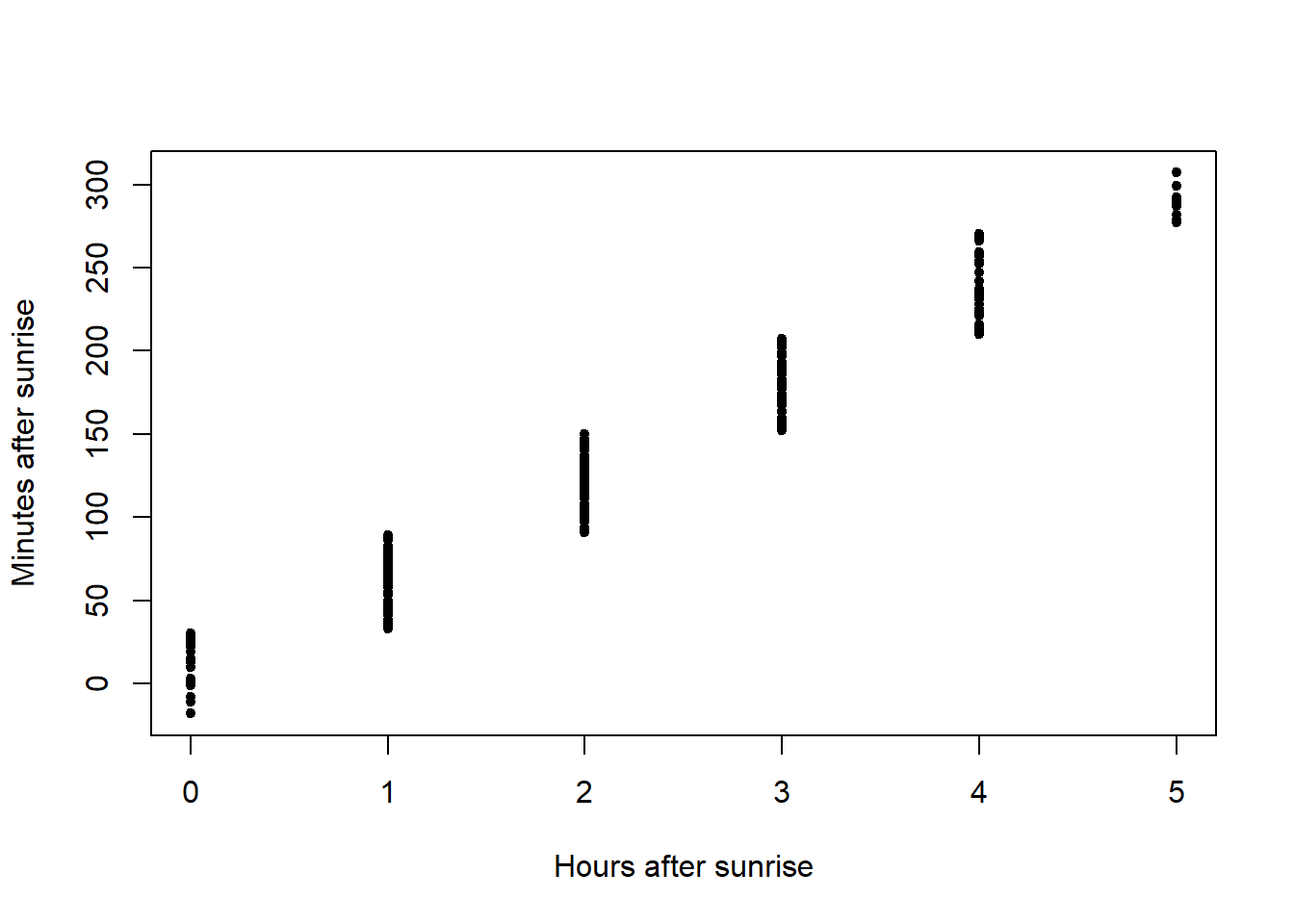

Plots of the covariates were generated. Not surprisingly, MAS and HAS are correlated and so we need to be cautious of including them in the same model.

boxplot(amakihi$distance~amakihi$OBs, xlab="Observer", ylab="Distance (m)")

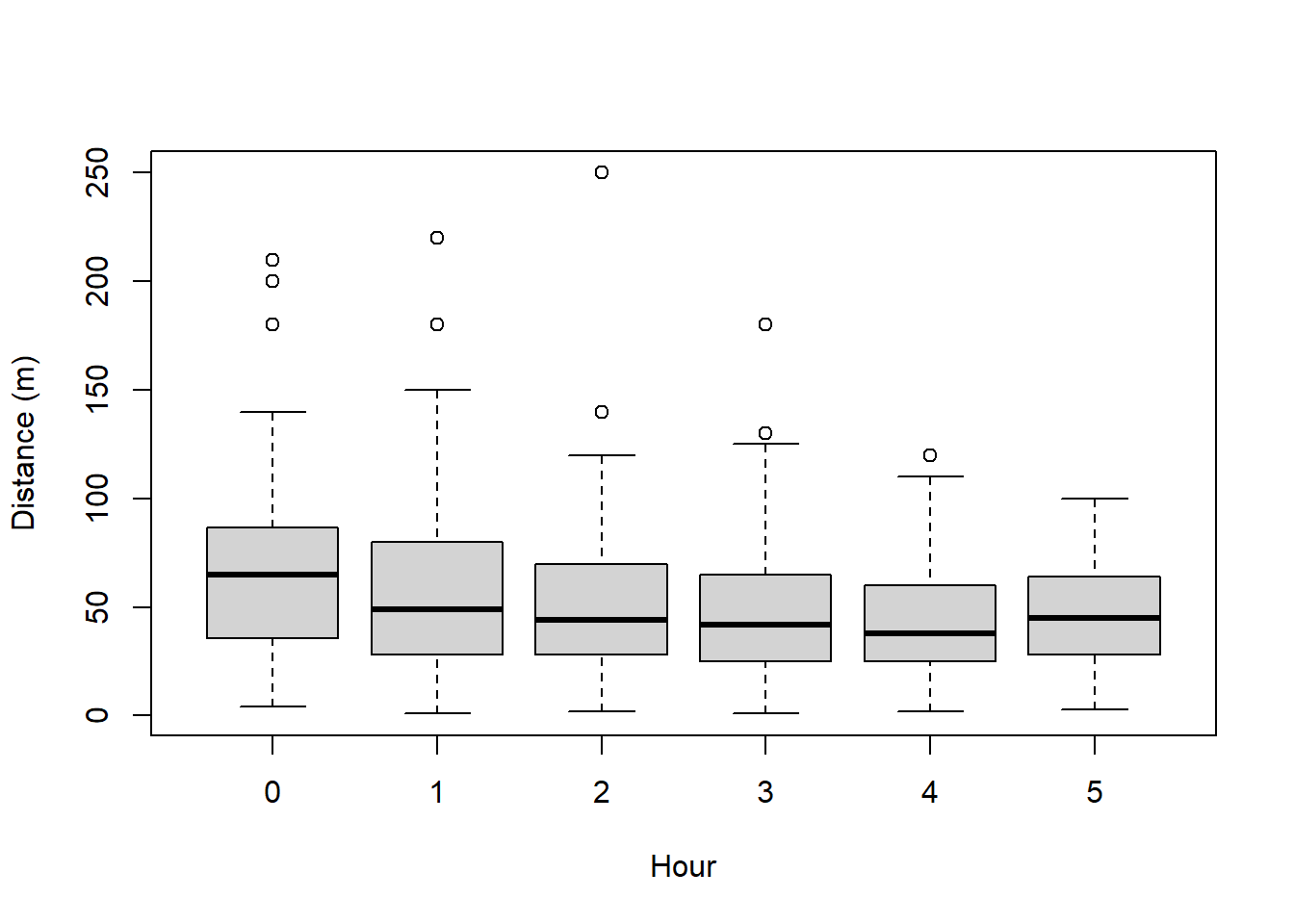

boxplot(amakihi$distance~amakihi$HAS, xlab="Hour", ylab="Distance (m)")

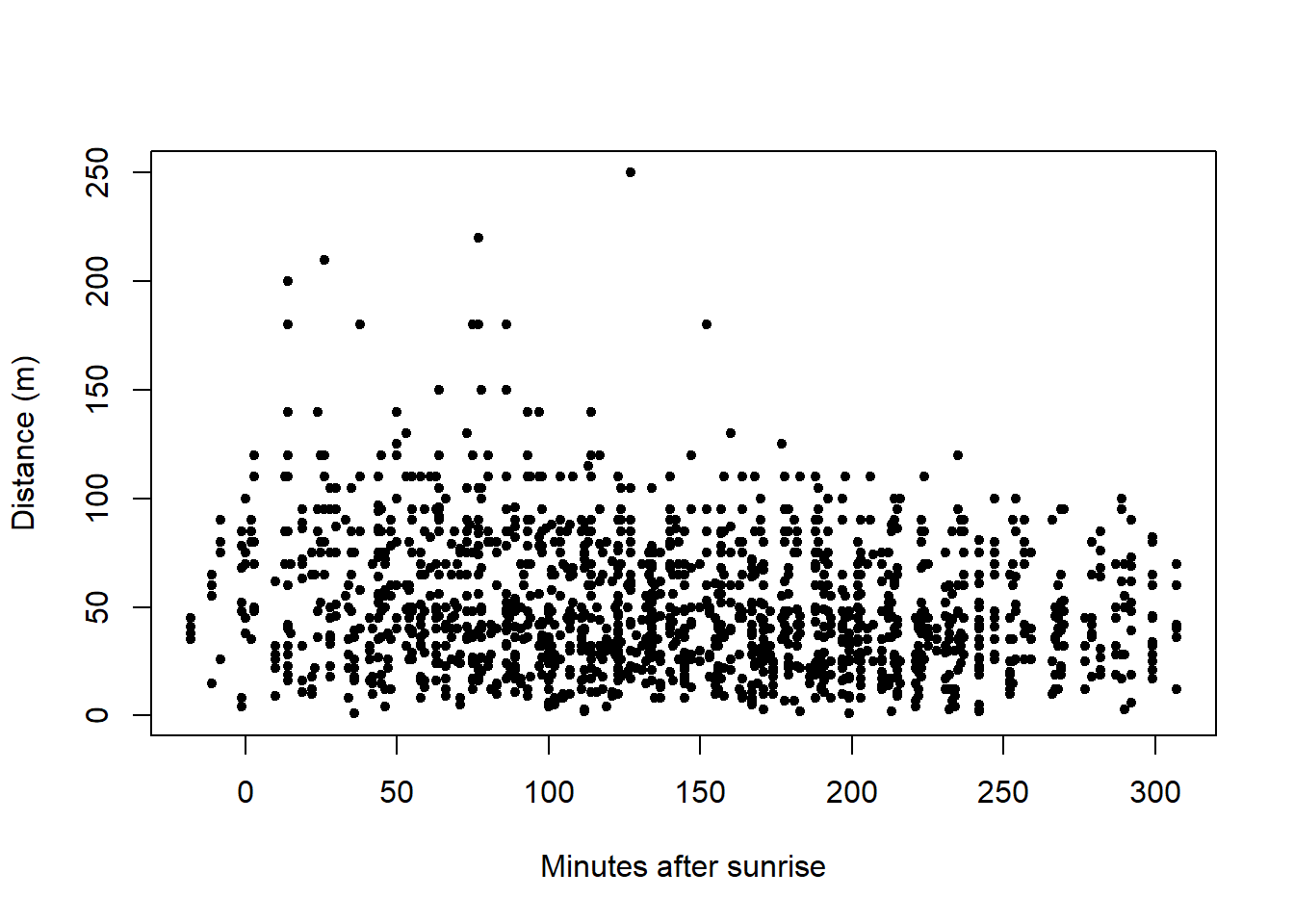

plot(x=amakihi$MAS, y=amakihi$distance, xlab="Minutes after sunrise",

ylab="Distance (m)", pch=20)

plot(x=amakihi$HAS, y=amakihi$MAS, xlab="Hours after sunrise",

ylab="Minutes after sunrise", pch=20)

Some rearrangement of the covariates, relevel simply shuffles the order of the observer and hour after sunrise factor covariates to conform to the analysis as presented in T. A. Marques et al. (2007).

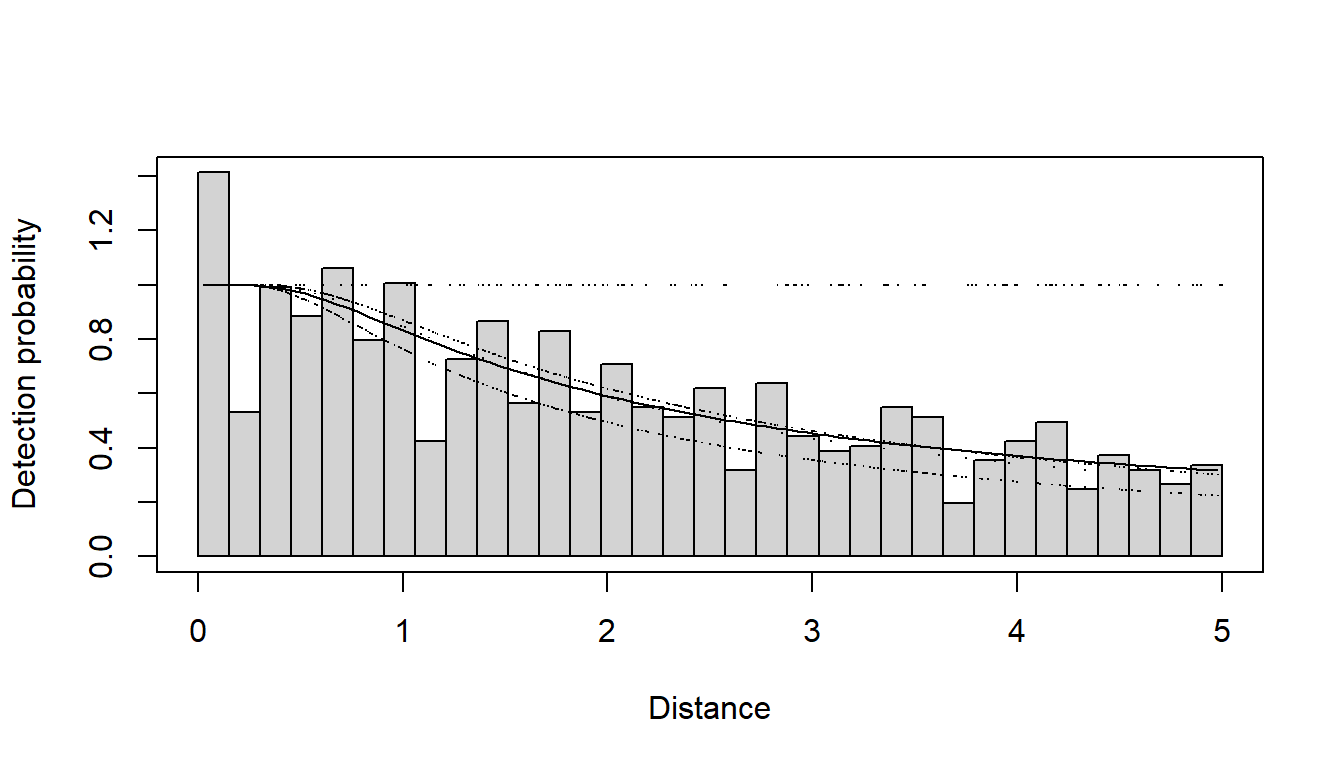

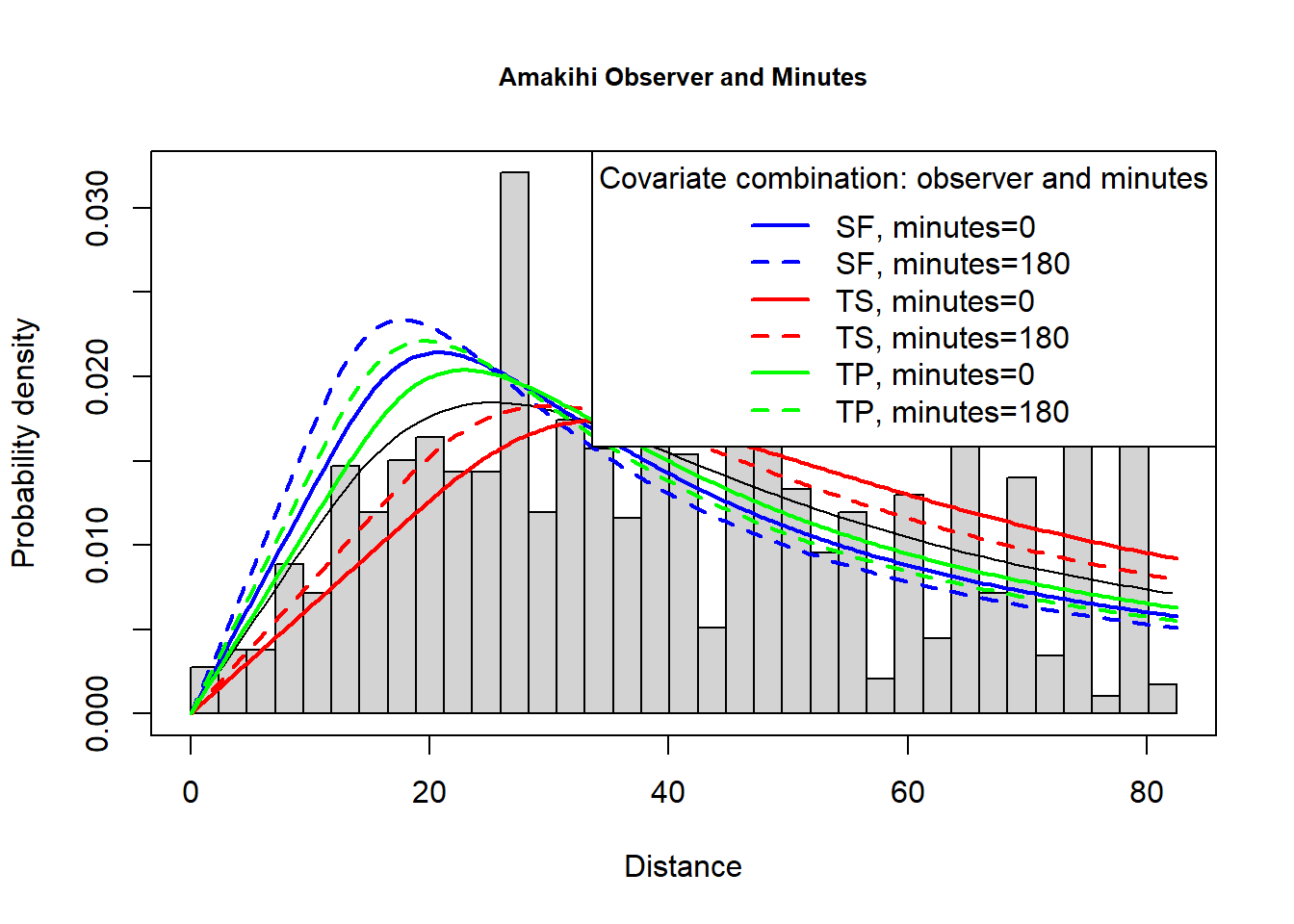

The model selected by T. A. Marques et al. (2007) used a hazard rate key function and included observer and minutes after sunrise (treating time as a continuous rather than discrete covariate)- this model is fitted below. The PDF plot is shown.

# Fit model selected by Marques et al (2007)

conv <- convert_units("meter", NULL, "hectare")

amak.hr.obs.mas <- ds(amakihi, transect="point", key="hr", formula=~OBs+MAS, convert_units = conv,

truncation=82.5)

plot(amak.hr.obs.mas, showpoints=FALSE, main="Amakihi Observer and Minutes", pdf=TRUE)

sfzero <- data.frame(OBs="SGF", MAS=0)

sf180 <- data.frame(OBs="SGF", MAS=180)

t1zero <- data.frame(OBs="TJS", MAS=0)

t1180 <- data.frame(OBs="TJS", MAS=180)

t2zero <- data.frame(OBs="TKP", MAS=0)

t2180 <- data.frame(OBs="TKP", MAS=180)

add_df_covar_line(amak.hr.obs.mas, data=sfzero, lty=1, lwd=2,col="blue", pdf=TRUE)

add_df_covar_line(amak.hr.obs.mas, data=sf180, lty=2, lwd=2,col="blue", pdf=TRUE)

add_df_covar_line(amak.hr.obs.mas, data=t1zero, lty=1,lwd=2,col="red", pdf=TRUE)

add_df_covar_line(amak.hr.obs.mas, data=t1180, lty=2, lwd=2,col="red", pdf=TRUE)

add_df_covar_line(amak.hr.obs.mas, data=t2zero, lty=1,lwd=2,col="green", pdf=TRUE)

add_df_covar_line(amak.hr.obs.mas, data=t2180, lty=2, lwd=2,col="green", pdf=TRUE)

legend("topright", legend=c("SF, minutes=0",

"SF, minutes=180",

"TS, minutes=0",

"TS, minutes=180",

"TP, minutes=0",

"TP, minutes=180"),

title="Covariate combination: observer and minutes",

lty=rep(c(1,2),times=3), lwd=2, col=rep(c("blue","red","green"), each=2))

A second round of model criticism

The model chosen by T. A. Marques et al. (2007) for making inference included minutes after sunrise (MAS) and observer (OBS) as covariates in the detection function. Did that model produce estimates of detection probability that were small for some detections? Recall, because we are using a Horwitz-Thompson-like estimator, our estimates of abundance are quite sensitive to detections with small values of \(\widehat{P_a(z_i)}\). Detections with small \(\widehat{P_a(z_i)}\) can have quite large impacts upon population estimates.

A new function in the most recent version of the Distance package, permits examination of the distribution of the \(\widehat{P_a(z_i)}\) so we can assess whether this analysis guideline is violated:

consider reducing the trunction distance if more than 5% of the \(\widehat{P_a(z_i)}\) are < 0.2, or if any are less than 0.1

| p | count | proportion |

|---|---|---|

| 0 - 0.1 | 0 | 0.000 |

| 0.1 - 0.2 | 167 | 0.134 |

| 0.2 - 0.3 | 106 | 0.085 |

| 0.3 - 0.4 | 515 | 0.414 |

| 0.4 - 0.5 | 455 | 0.366 |

| 0.5 - 0.6 | 0 | 0.000 |

This suggests redoing the analysis with stronger truncation. I’ll repeat the model fitting (without showing the code) using 70m as the truncation distance. What do we see when examining the distribution of the \(\widehat{P_a(z_i)}\) with the more stringent truncation?

| p | count | proportion |

|---|---|---|

| 0 - 0.1 | 0 | 0.000 |

| 0.1 - 0.2 | 0 | 0.000 |

| 0.2 - 0.3 | 207 | 0.184 |

| 0.3 - 0.4 | 48 | 0.043 |

| 0.4 - 0.5 | 678 | 0.603 |

| 0.5 - 0.6 | 191 | 0.170 |

Should this much truncation cause concern for point estimates and precision?

The news that model criticism should start over with a new truncation distance would not be good news. Concerns might also creep into your heads that removing data might affect the point estimates and possibly the precision. In this case, moving the truncation distance from 82.5m to 70m removed only 1.5% (19) detections, so it is unlikely that precision was affected.

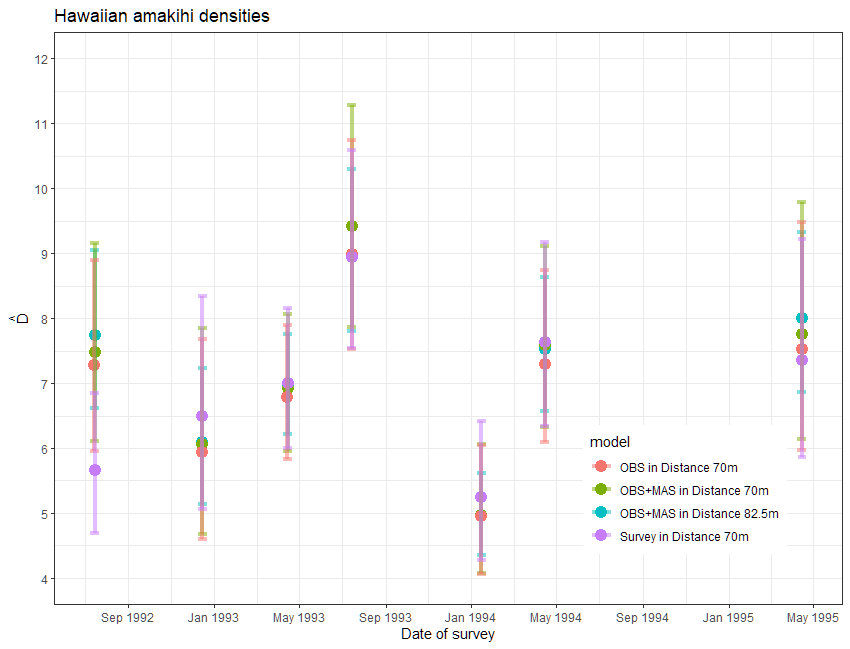

Regarding the effect upon the point estimates of amakihi density, let’s take a step back. If you were to examine the point and interval estimates for this data set from a number of detection function models, you would find the estimates are robust to model choice.

My impression

For this data set, the effort expended in discriminating between models with one vs two covariates as well as the angst about truncation distances had imperceptible effects upon the survey-specific density estimates. In fact, a model simply using survey replicate as a covariate produced largely the same density estimates. Understanding the temporal dynamics of the amakihi population was not influenced by covariate modelling.

More MCDS with line transects: ETP dolphins (optional)

We have not provided a comprehensive analysis of these data but have highlighted a few general feature of these data. A more complete analysis can be found in F. F. C. Marques & Buckland (2003).

Region.Label Area Sample.Label Effort object distance LnCluster Month

1 Default 0 1 1 1 2.25 6.792344 6

2 Default 0 1 1 2 3.15 7.080868 6

3 Default 0 1 1 3 3.00 5.181784 6

Beauf.class Cue.type Search.method size Study.Area

1 2 3 0 891 Dolphin

2 2 1 2 1189 Dolphin

3 2 1 2 178 Dolphin Variable Units

1 Effort nautical mile

2 distance nautical mile

3 Area square nautical mileNotice that effort and perpendicular distances are both measured in nautical miles and that density is to be reported in animals per square nautical mile, so the conversation factor in this case is 1 and we do not represent it here.

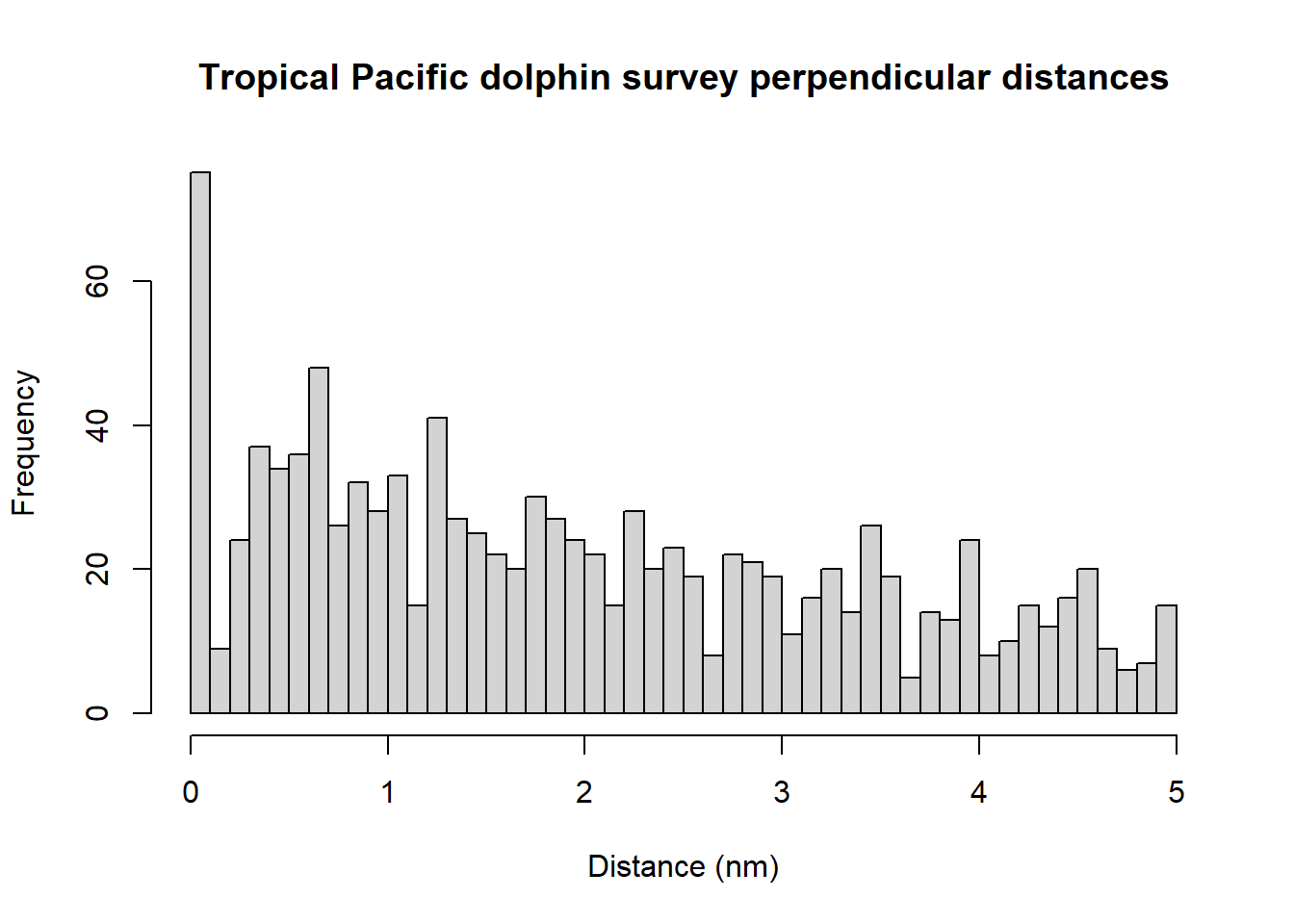

To obtain an overall impression of the data, it is useful to fit a histogram with many intervals.

hist(ETP_Dolphin$distance, nclass=50, xlab="Distance (nm)",

main="Tropical Pacific dolphin survey perpendicular distances")

The spikes in the histogram suggest that distances have been rounded to zero and possibly other values. To mitigate these problems, the distances could be binned although we do not do so in the analysis below. The distances have already been truncated at 5 nm and so we will not truncate distances further.

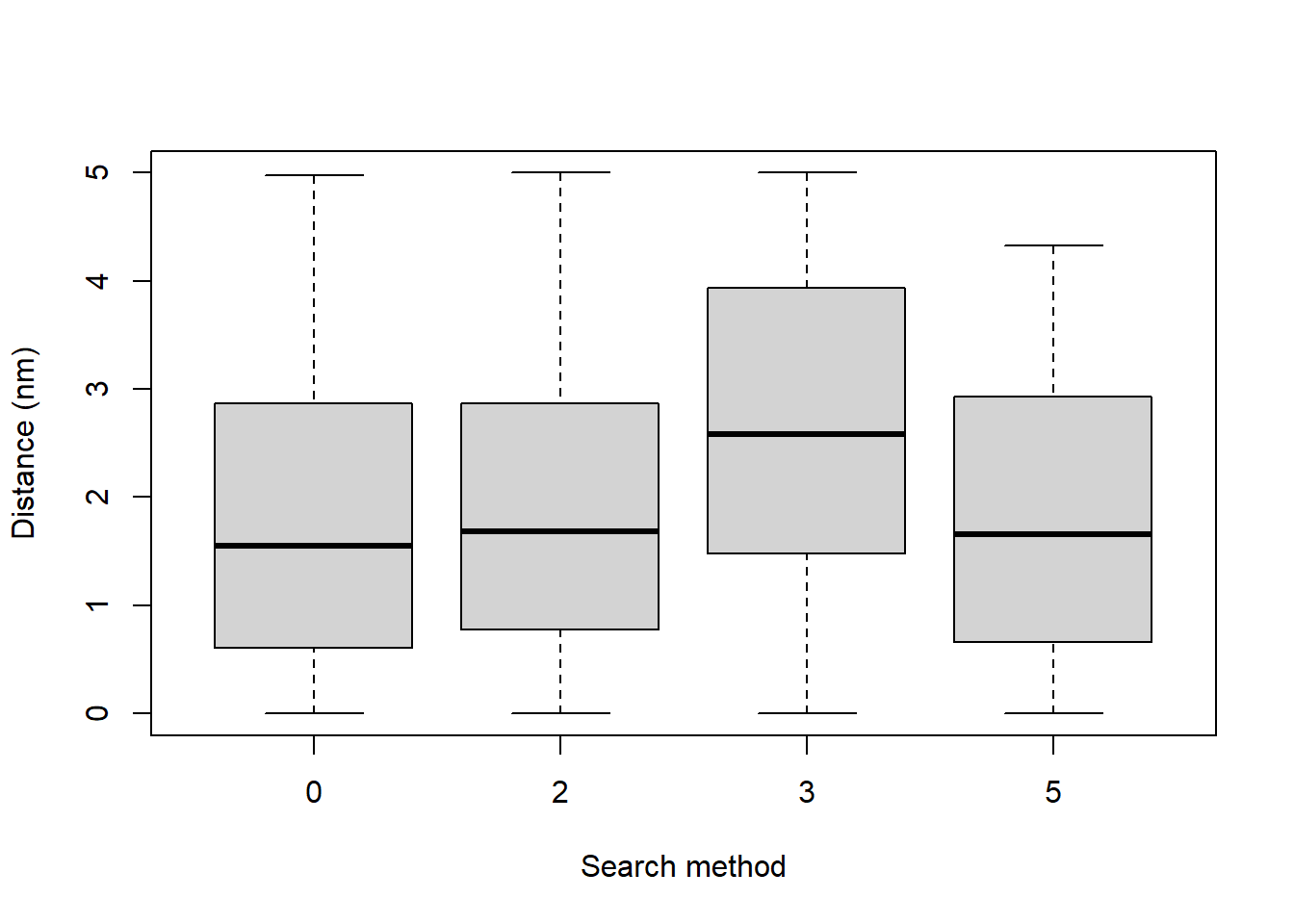

boxplot(ETP_Dolphin$distance~ETP_Dolphin$Search.method, xlab="Search method",

ylab="Distance (nm)")

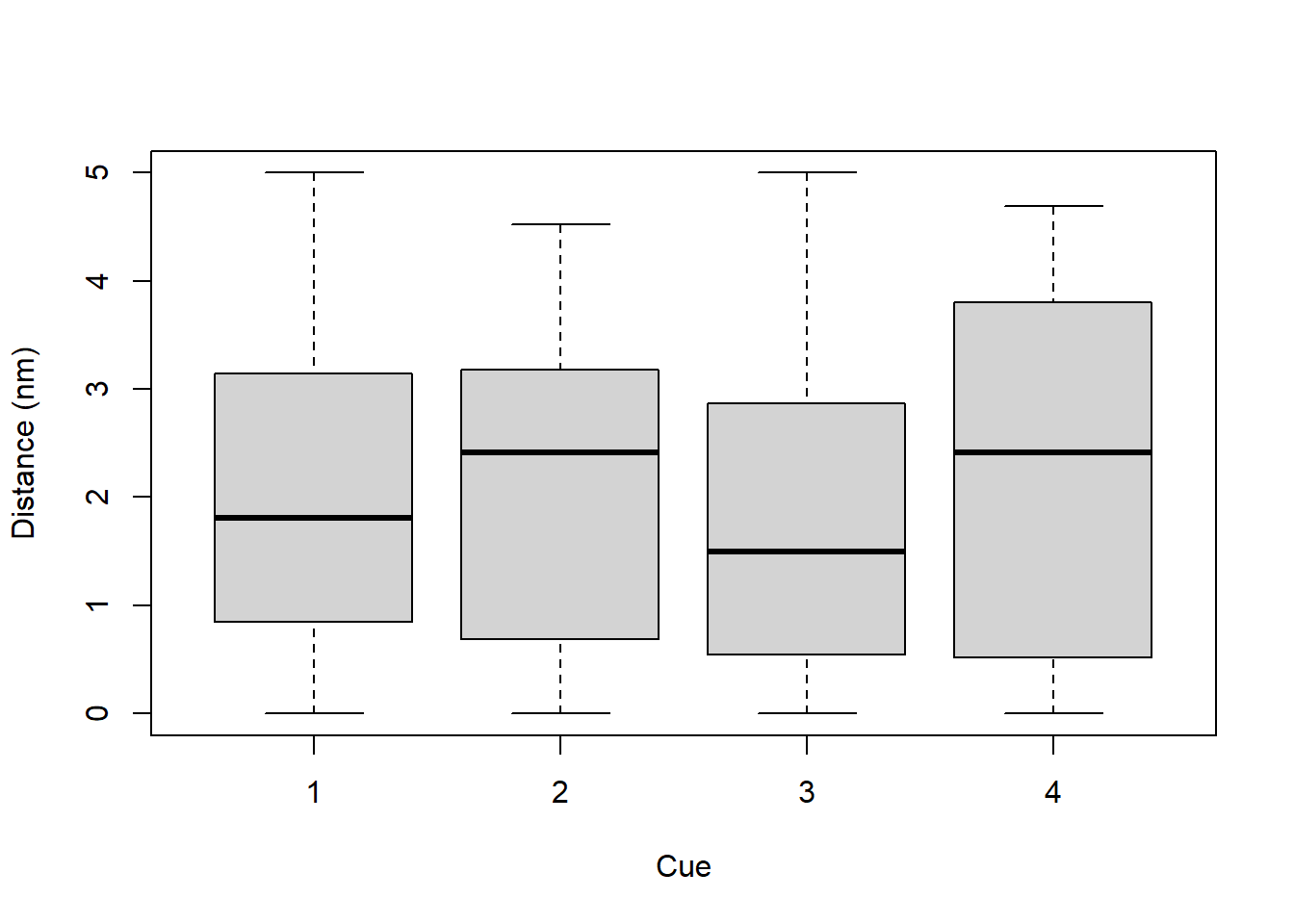

boxplot(ETP_Dolphin$distance~ETP_Dolphin$Cue.type, xlab="Cue", ylab="Distance (nm)")

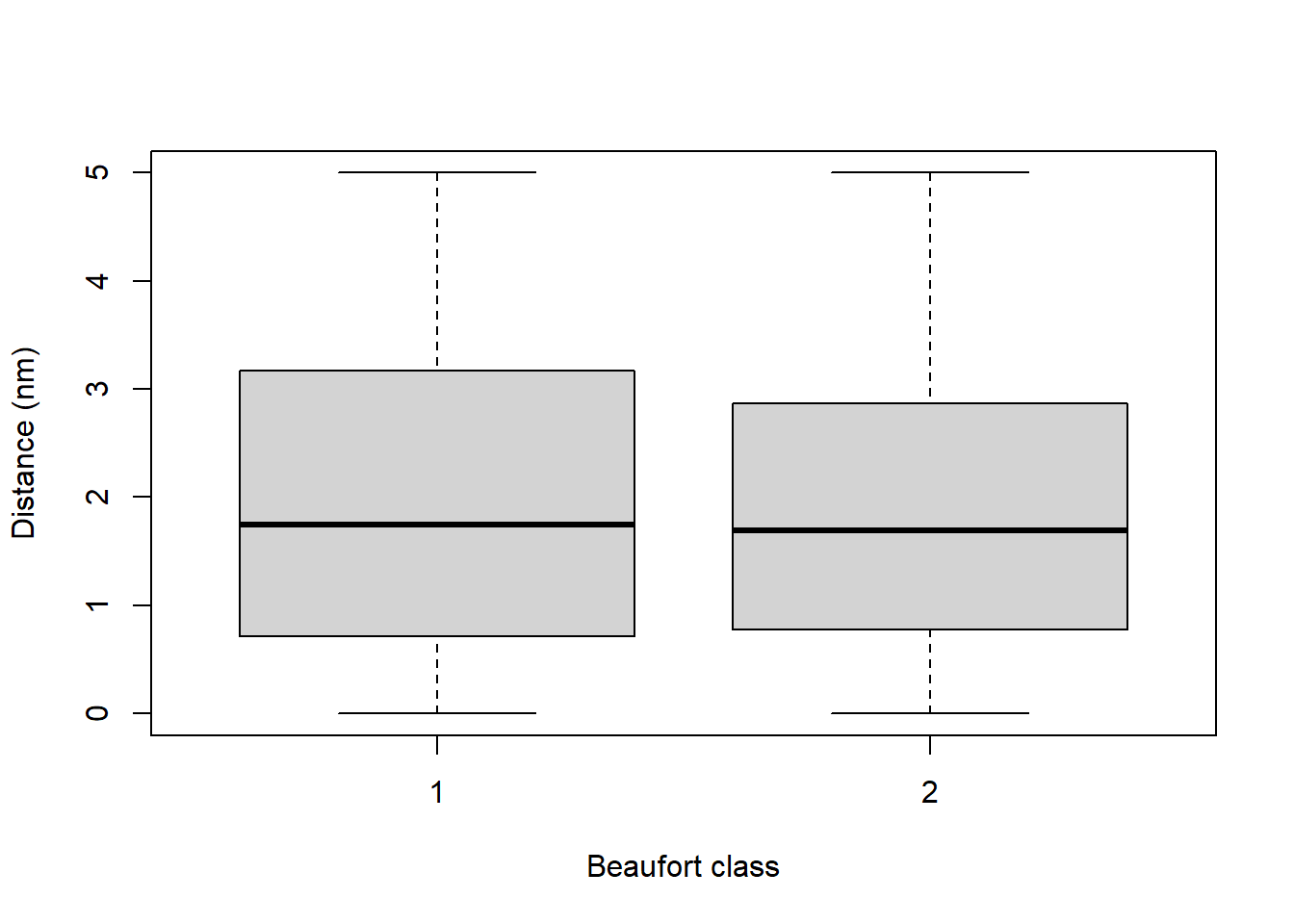

boxplot(ETP_Dolphin$distance~ETP_Dolphin$Beauf.class, xlab="Beaufort class",

ylab="Distance (nm)")

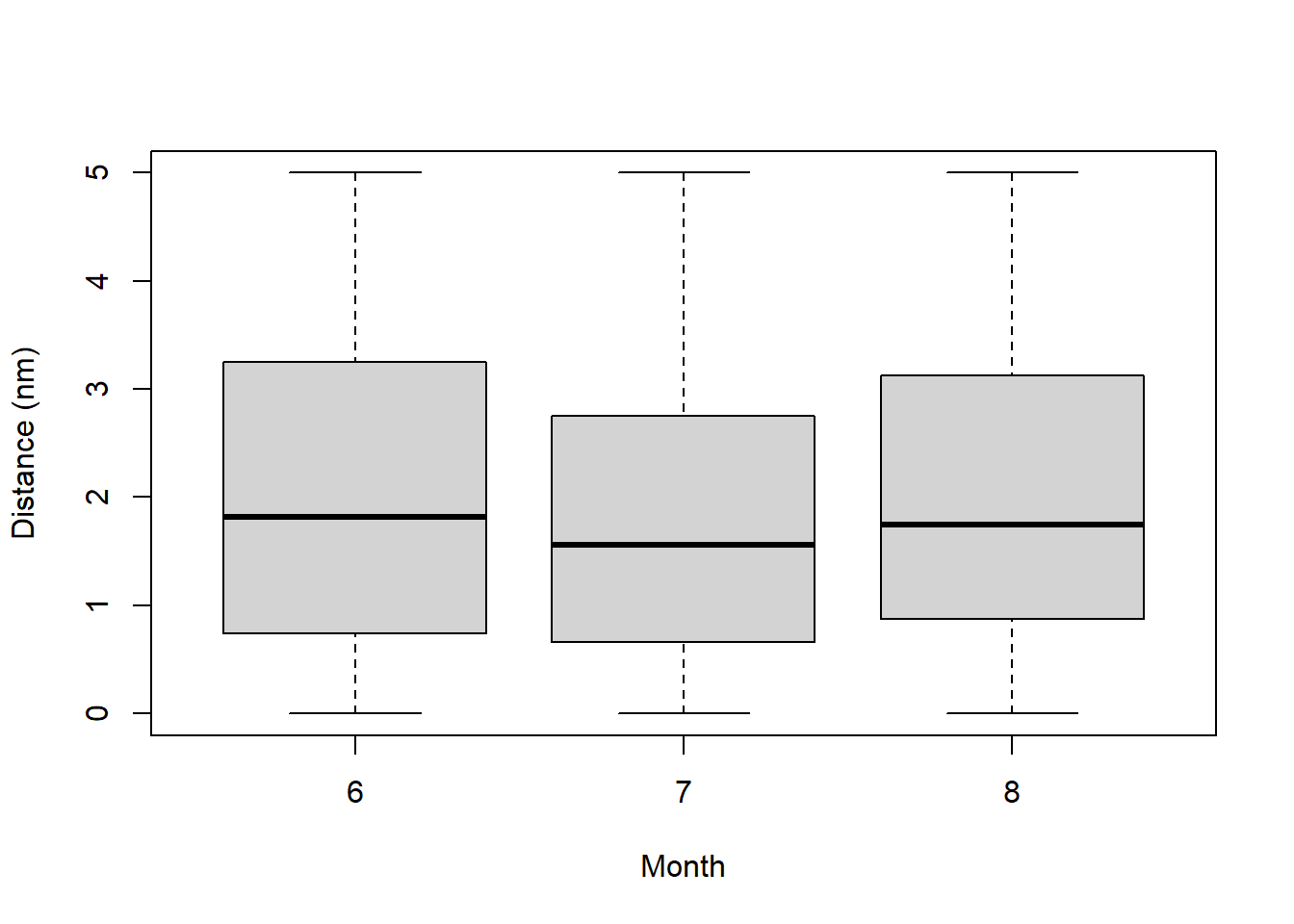

boxplot(ETP_Dolphin$distance~ETP_Dolphin$Month, xlab="Month", ylab="Distance (nm)")

To decide whether to fit a half normal or a hazard rate key function, each of these is tried in turn.

etp.hn <- ds(ETP_Dolphin, key="hn", adjustment=NULL)

etp.hr <- ds(ETP_Dolphin, key="hr", adjustment=NULL)

knitr::kable(as.data.frame(AIC(etp.hn, etp.hr))) %>%

kable_styling(bootstrap_options = "condensed", full_width = F) | df | AIC | |

|---|---|---|

| etp.hn | 1 | 3377.489 |

| etp.hr | 2 | 3365.915 |

The AIC values suggest that hazard rate key function is preferable to the half normal and so this will be used as the key function in the MCDS models. Each covariate is introduced in turn.

etp.hr.search <- ds(ETP_Dolphin, key="hr", formula=~factor(Search.method))

etp.hr.cue <- ds(ETP_Dolphin, key="hr", formula=~factor(Cue.type))

etp.hr.bf <- ds(ETP_Dolphin, key="hr", formula=~factor(Beauf.class))

etp.hr.month <- ds(ETP_Dolphin, key="hr", formula=~factor(Month))

knitr::kable(summarize_ds_models(etp.hr, etp.hr.search, etp.hr.cue, etp.hr.bf, etp.hr.month,

output="plain")[,2:7], row.names = FALSE,

caption="ETP dolphin model selection.", digits=3) %>%

kable_styling(bootstrap_options = "condensed", full_width = F) | Key function | Formula | C-vM $p$-value | Average detectability | se(Average detectability) | Delta AIC |

|---|---|---|---|---|---|

| Hazard-rate | ~factor(Search.method) | 0.388 | 0.584 | 0.036 | 0.000 |

| Hazard-rate | ~factor(Cue.type) | 0.449 | 0.587 | 0.039 | 23.314 |

| Hazard-rate | ~factor(Month) | 0.494 | 0.593 | 0.039 | 24.264 |

| Hazard-rate | ~1 | 0.461 | 0.601 | 0.038 | 26.140 |

| Hazard-rate | ~factor(Beauf.class) | 0.474 | 0.601 | 0.039 | 27.993 |

Based on the AIC, it seems as though the model including search method was preferable and we could continue the model selection process by looking at models with two covariates. However, before going on it is worth looking at the search method model in more detail. If we look at the detection function parameters for this model:

Distance sampling analysis object

Summary for ds object

Number of observations : 1090

Distance range : 0 - 5

AIC : 3339.775

Optimisation : mrds (nlminb)

Detection function:

Hazard-rate key function

Detection function parameters

Scale coefficient(s):

estimate se

(Intercept) 0.3355272 0.2072090

factor(Search.method)2 0.3208247 0.2112992

factor(Search.method)3 3.1274315 78.5965032

factor(Search.method)5 0.2430573 0.5378512

Shape coefficient(s):

estimate se

(Intercept) 0.07827832 0.1387482

Estimate SE CV

Average p 0.5837971 0.03602487 0.06170786

N in covered region 1867.0869397 121.18692980 0.06490696we see that the estimated scale coefficient for search method 3 is substantially larger than the estimated scale coefficients for other methods. The effect this has on the detection function is clearly seen in the detection function plot.

Search method 3 indicated that the detection was from a helicopter and this detection function suggests that all dolphin schools out to 5 nm were being detected and so detection does not decrease as distance increases. One assumption of MCDS is that the perpendicular distance distributions of the covariate factor levels have the same shape and so it may be worth refitting the models but excluding the observations made by the helicopter.

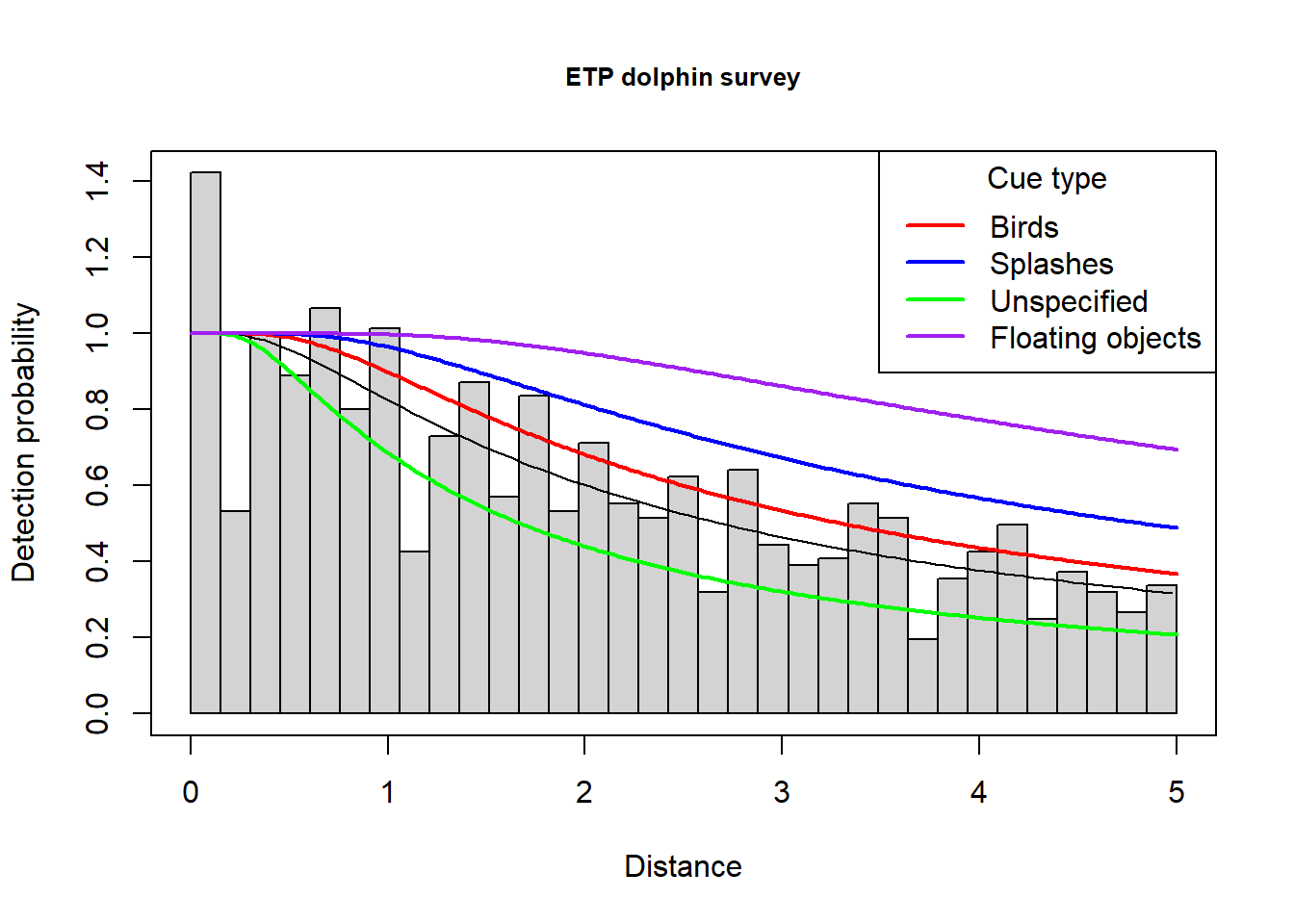

Colourful plot noting effect of cue type

Just an example of using the function add_df_covar_line to visually explore consequences of covariates on the detection function. A regular call to plot() is first used to produce the histogram and average detection function line; subsequent calls to the new function with different values of the covariate of interest completes the plot.

plot(etp.hr.cue, main="ETP dolphin survey", showpoints=FALSE)

add_df_covar_line(etp.hr.cue, data = data.frame(Cue.type=1),

col='red', lwd=2, lty=1)

add_df_covar_line(etp.hr.cue, data = data.frame(Cue.type=2),

col='blue', lwd=2, lty=1)

add_df_covar_line(etp.hr.cue, data = data.frame(Cue.type=3),

col='green', lwd=2, lty=1)

add_df_covar_line(etp.hr.cue, data = data.frame(Cue.type=4),

col='purple', lwd=2, lty=1)

legend("topright",

legend=c("Birds","Splashes","Unspecified","Floating objects"),

col=c("red", "blue", "green", "purple"), lwd=2, title = "Cue type")